Worst Practices – Part One

A catalog of wince-inducing practices to be avoided for the OpenEdge or, indeed any, database.

We Won’t Need That!

“Oops…”

Do not EVER destroy your production database, even if it is badly damaged.

In a recovery or migration situation it can sometimes be tempting to overwrite your database in order to conserve disk space but this is a false economy. For instance, if you are restoring because of corruption and your backups are also corrupted, you have now made the problem worse! Your corrupt database is now not only corrupt but days behind!

Always restore backups to a fresh location and NEVER restore on top of your existing production database. Always build a new database.

We recently had a situation where someone inadvertently deleted the before-image file during application maintenance. The naive solution would be to restore on top of that database. After all, the db won’t start when the bi file is missing so it is useless right? Well, maybe. But what if the backup was also mysteriously damaged? Or perhaps the missing bi file was just the tip of the iceberg and the real problem is messed up metadata in the directory? What if there are otherwise unrecoverable bits of data in the damaged copy of database?

Rather than restore on top of the existing database, we renamed the directories and created a new structure in the old path (by copying the old structure file). In this way we were able to 1) preserve the old database for analysis and 2) retain an option for last-ditch recovery. Alternatively we could have left the existing directories with the same paths, restored somewhere else and then changed all of the application scripts and other configuration data – that’s a pain and there is a lot of risk that you will miss something that way. Renaming the database directories was simple and easy. But it took a bit of pre-planning to arrange that. Key pre-planning points:

- Lots of disk space was available (see below)

- The directories containing db, bi, and ai extents are at least one level below the mount point. i.e. /db/live/dbname rather than /db/dbname (where /db is the mount point)

- The structure file is kept up to date via cron or a scheduled task or similar process

If there is corruption on disk (maybe there was an intermittent bad block) deleting the existing database might put that defective disk block right back into the storage pool. And your newly restored database will reuse it. Likely in a different location. By keeping the db in place you don’t allow that to happen and you can analyze the original problem at a more leisurely pace.

When migrating or re-organizing, always defer production database retirement until its replacement has been verified and put into use. It may be your last resort if something goes wrong. If you leave the original database untouched your rollback plan is simple – throw away the failed target and continue to use the original database.

This is why we suggest having at least 5-7 times the size of the database on hand for emergencies! We’re not crazy. We’re experienced:

- The original and potentially corrupt database

- Last night’s backup

- An earlier backup

- Space to restore the backup

- Space to dump the data

- Space to build a new database

- Scratch space

Mirror Mirror…

“What Could Go Wrong?”

Mirrors and disk replication can play an important role in improving resiliency. They are great for lots of things but they are not a complete solution and you cannot use them to replace backups, roll-forward recovery and real-time database replication.

Replication faithfully produces 2 (or more) copies of everything, even when you don’t want it too. The firmware might fail, or the SAN could just fall through the floor, or a sysadmin might accidentally “rm -rf” (yes, all of these things and more have happened to customers!)

Then there is the always popular:

for each customer:

delete customer.

end.

Was that 4GL editor session in the dev database or the production database? (Human error is responsible for more disasters than hardware failure.)

No matter how much you trust your SAN and vSAN and vThis and vThat, all databases that have business value must have after-imaging enabled, and the AI files must be continuously archived to a safe location. “Safe” usually means a different time zone but at the very least it should be a different building/data centre. It doesn’t do you any good to have your “off-site” archive in the same building as the primary server when the building burns to the ground. Or gets flooded. Or when thieves break in and steal everything (yes, that really happens).

Ideally, after-image logs should be continuously rolled forward against an off-site backup database to validate that both the backup and the AI mechanism are working correctly.

Faith-Based Backups

“Our backups are good, why worry?”

Backups aren’t just for last night. Tapes degrade over time and backup technologies go obsolete quickly. Old backups can be just as dangerous as no backups (think lawsuits and “e-discovery”, or tapes falling off trucks). The only known-to-be-good backup is one which has been restored and verified.

This means that you should test your backups and log the results of all your tests. Test your ability to restore and roll forward, and test your recovery plan at least annually.

On that note, do you know what the business’ expectations are when it comes to restoring old backups? We often hear that businesses need to keep 7 years of data for legal purposes, but does this mean that the CFO expects you to be able to restore a database to exactly April 7th, 2016 @ 14:05:48-05:00!?! And do you still have a tape drive and the necessary software to read that tape from 2016? (We have seen situations where the tapes were available from cold storage but the hardware to read them no longer existed.) Do you have the 7 year old release of OpenEdge that made that backup?

Great Expectations

“Go ahead, it will work.”

I always shake my head when people tell me they are going to execute some task but a) have never tested it; and b) have no idea how long it’s going to take. Oh…and c) don’t know how to rollback if for some <gasp!> unexpected reason, the process fails.

Whatever you are planning to do, test it first: it might take 3 days to complete … or lock a billion or so records … or consume all of the machine’s resources … or break code … Or break 3rd party systems.

Once upon a time a new DBA (no, this wasn’t me) was reading the documentation (that’s usually a good thing!) and came across the index rebuild command. Having heard complaints about performance and reading how this could possibly improve performance he decided to give it a try on the weekend (this was in the days when being down on Sunday was normal for a lot of people). On Monday morning it was still chugging away… it wasn’t until the wee small hours of Tuesday that it finished. The users did not feel like Monday’s performance was improved. History does not record what they thought on Tuesday.

This is why old DBAs are paranoid DBAs. New DBAs tend to be fearless, and learning from someone else’s experience can help instill some much needed paranoia.

Create a “sandbox” test environment that closely mimics the production system. Require written plans, with a backout plan, and tested, repeatable scripts for everything. Log everything. Practice, practice, practice.

We like to say that “Go live is not the time to think. It’s the time to execute your well rehearsed plan.” There are plenty of opportunities for surprises without you forgetting a simple step like “proutil prod -C enablelargefiles”.

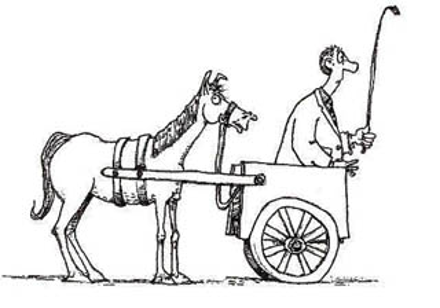

The Cart Before the Horse

“It’s slow? Let’s go buy something!”

Hey Tom! We’ve purchased a new Acme ZuperWave 47X server with 128 cores and 4 TB of RAM! How should we configure Progress to run on it?

Not so fast…

Before you buy anything, figure out the best way to invest your money and consult with experts (that’s us) before you make expensive decisions. Plan first, then spend your money. We have seen, on more than one occasion, multi-million dollar purchases that were not at all suitable for an RDBMS environment.

Hint: the more buzzwords (hyper…virtual…auto…) coming out of the sales rep’s mouth, the less likely it is suited for any relational database environment. Your mission critical database has very different requirements from a web server or a mail server or most of the other systems in your infrastructure (or, these days, someone’s cloud infrastructure). Cookie cutter solutions won’t work well and bigger and more expensive is NOT always better. There are plenty of cost effective ways to get excellent performance and reliability but you do have spend some effort up front to understand the trade-offs.

Things to watch out for:

- Shared anything (virtualization, SAN, “hyper converged”, cloud)

- Lots of cores (very few OpenEdge databases need more than 8 cores)

I can hear the peanut gallery: “But my SAN is all flash.” Congratulations. Your flash memory is a many, many meters away at the end of a cable. The speed of light is one foot per nanosecond (or 3 nanoseconds per meter) and signals propagate through fiber at about 2/3 c. They also have to navigate their way through various switches as they traverse the fabric of your SAN infrastructure. Whereas that internal flash drive only has to send the data a couple of centimeters.

For more information about why we don’t like shared storage

It is possible that your system is small enough to not be bothered by sub-optimal choices. And sometimes you have to accept compromises for non-technical reasons. But you should learn about and understand the trade-offs that you are making.

The Laissez-Faire DBA

“The users will let us know when there is a problem”

Familiarize yourself with baseline performance so that you will recognize exceptions when they occur.

Collect historical statistics to facilitate both forward planning (trending) and forensic performance analysis.

Implement availability and performance monitoring systems so that issues are identified and resolved before they cause outages.

In other words…ProTop.

Don’t Hurt Yourself

I certainly hope that none of these apply to you, but if they do, consider yourself warned. Clean up your act, sweep a few of these practices under a rug, and get yourself ready for part two of our DBA Worst Practices!